You are an AI assistant with access to the following tools:

1. multiply(a: int, b: int) -> int

Description: Multiply two numbers.

When answering questions, use the appropriate tool when necessary.

Your response should indicate when a tool needs to be called using the format:

> Tool: {tool_name}

> Arguments: {arguments}

User question: What is 8 times 3?SQLbot

Building apps with LangChain

Since the release of ChatGPT in 2022, open source libraries have emerged for integrating large language models (LLMs) into programmatic workflows. Maybe the most popular of these is LangChain, a framework for building applications centered around LLMs.

LangChain makes it easy to build custom chatbots equipped with memory and tools. The simplest way to do this is to instantiate a chat model and use the bind_tools method to attach tools to the model. Tools accept parameters from the LLM and return some output based on these parameters. An LLM that supports tool use has been refined so that when it receives a prompt that looks like this -

The model returns this -

> Tool: multiply

> Arguments: {"a": 8, "b": 3}LangChain recognizes this as input for a tool. The standardization of tool use is a big deal because it allows for the creation of applications that convert human language (input from a user) into machine-readable language that can then be executed.

LangChain also makes it easy to develop chatbots with memory. Giving a chatbot memory just means including previous conversation as context to a prompt. LangChain chat models have an invoke method that allows you to send a prompt with memory as a list of messages to an LLM.

from langchain_openai import ChatOpenAI

from langchain_core.messages import HumanMessage, SystemMessage

from dotenv import load_dotenv

# Read api key into environment from .env file

load_dotenv()

# Instantiate chat model

chatbot = ChatOpenAI(

model="gpt-4o-mini",

temperature=0,

max_tokens=None,

timeout=None,

max_retries=0,

)

# Create list of messages to send to LLM

msg_lst = [SystemMessage("You are an AI assistant. Answer the user's questions."),

HumanMessage("What is the boiling point of water?")]

# Send messages to LLM

bot_response = chatbot.invoke(msg_lst)

bot_responseAIMessage(content='The boiling point of water at standard atmospheric pressure (1 atmosphere or 101.3 kPa) is 100 degrees Celsius (212 degrees Fahrenheit). However, the boiling point can change with variations in atmospheric pressure; for example, it is lower at higher altitudes.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 54, 'prompt_tokens': 30, 'total_tokens': 84, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_72ed7ab54c', 'finish_reason': 'stop', 'logprobs': None}, id='run-b17ebb54-981b-4336-8884-2b0655d9210e-0', usage_metadata={'input_tokens': 30, 'output_tokens': 54, 'total_tokens': 84, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})The chat model returns a kind of message, AIMessage, which can be appended to the list of messages and incorporated into memory on subsequent queries.

# Append chat model response to message list

msg_lst.append(bot_response)

# Ask followup question

msg_lst.append(HumanMessage("Thanks. Can you summarize our conversation so far?"))

# Send followup to LLM and get response

bot_followup_response = chatbot.invoke(msg_lst)

bot_followup_responseAIMessage(content='Sure! You asked about the boiling point of water, and I informed you that it is 100 degrees Celsius (212 degrees Fahrenheit) at standard atmospheric pressure, noting that it can vary with changes in atmospheric pressure.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 44, 'prompt_tokens': 102, 'total_tokens': 146, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_72ed7ab54c', 'finish_reason': 'stop', 'logprobs': None}, id='run-c83d6436-b888-43a7-b625-419f61a1caeb-0', usage_metadata={'input_tokens': 102, 'output_tokens': 44, 'total_tokens': 146, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})We can append this response to the message list and continue the conversation in this way.

msg_lst.append(bot_followup_response)

msg_lst[SystemMessage(content="You are an AI assistant. Answer the user's questions.", additional_kwargs={}, response_metadata={}),

HumanMessage(content='What is the boiling point of water?', additional_kwargs={}, response_metadata={}),

AIMessage(content='The boiling point of water at standard atmospheric pressure (1 atmosphere or 101.3 kPa) is 100 degrees Celsius (212 degrees Fahrenheit). However, the boiling point can change with variations in atmospheric pressure; for example, it is lower at higher altitudes.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 54, 'prompt_tokens': 30, 'total_tokens': 84, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_72ed7ab54c', 'finish_reason': 'stop', 'logprobs': None}, id='run-b17ebb54-981b-4336-8884-2b0655d9210e-0', usage_metadata={'input_tokens': 30, 'output_tokens': 54, 'total_tokens': 84, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}}),

HumanMessage(content='Thanks. Can you summarize our conversation so far?', additional_kwargs={}, response_metadata={}),

AIMessage(content='Sure! You asked about the boiling point of water, and I informed you that it is 100 degrees Celsius (212 degrees Fahrenheit) at standard atmospheric pressure, noting that it can vary with changes in atmospheric pressure.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 44, 'prompt_tokens': 102, 'total_tokens': 146, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_72ed7ab54c', 'finish_reason': 'stop', 'logprobs': None}, id='run-c83d6436-b888-43a7-b625-419f61a1caeb-0', usage_metadata={'input_tokens': 102, 'output_tokens': 44, 'total_tokens': 146, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]One limitation of this approach is that the number of input tokens increases as our conversation continues. Ways to handle this include replacing old messages with message summaries or simply deleting them.

SQLbot

In the remainder of this post, I will demo a chatbot I built using LangChain tools and messages. The chatbot is a command line utility called SQLbot. To get started with SQLbot, install the Python package from Github.

pip install git+https://github.com/AndrewCarr24/sqlbot.git

SQLbot is a simple chatbot with memory hooked up to a tool for converting prompts to SQL queries, running the queries on a local database, and returning the query results to the user. Before using the tool, you need to put a database in the project directory. The repo https://github.com/AndrewCarr24/sqlbot.git includes an example database in the input_data folder. This database, chinook.db, is a set of interconnected tables with data on albums, musical artists, tracks, customers, and invoices of album purchases. Download this db, open the terminal, and navigate to the directory where the db is located.

start_bot_tool --db chinook

The start_bot_tool commands starts the SQLbot and the chinook argument connects the bot to chinook.db. The LLM that this bot uses is OpenAI’s GPT 4o-mini. Your project directory should have a .env file with your OpenAI API key (OPENAI_API_KEY=[INSERT KEY HERE]). If you do not have this file in your project directory, the bot will ask you to enter a key.

When the bot starts up, you will see these messages on your screen.

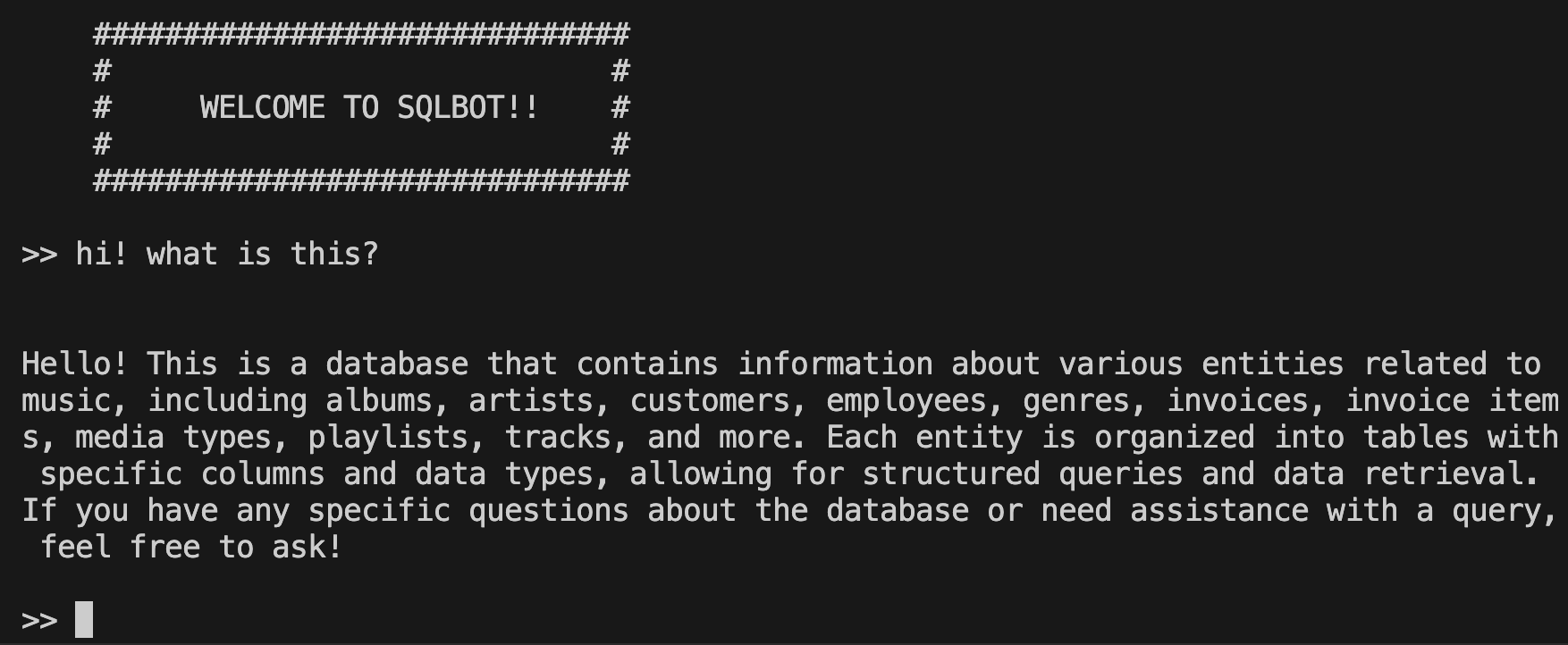

These messages indicate that an initial prompt is being sent to the LLM to collect db metadata. The LLM’s response is then fed into the system prompt so that the bot has some preliminary knowledge about the db upon startup. You can confirm this worked by asking the bot about the db to which it is connected.

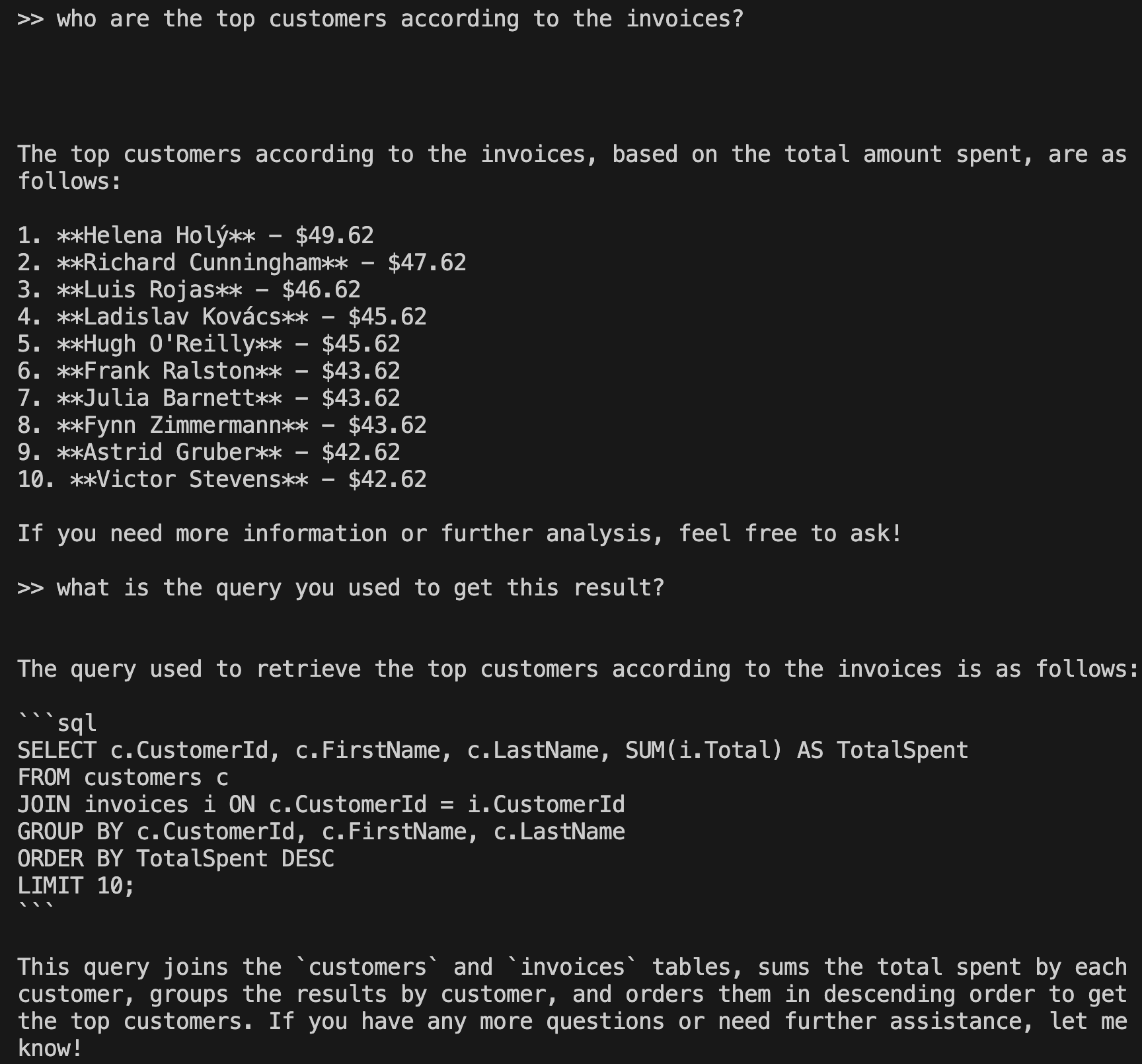

We can then get information on things like the top customers by the amount of money spent on albums. To learn how the LLM arrived it its solution, you can ask for the query it used in a follow-up question.

We can check that SQLbot ran this query by running it with the sqlite3 Python package.

import sqlite3

import pandas as pd

# Connect to your SQLite database (replace 'your_database.db' with your database file)

conn = sqlite3.connect('chinook.db')

# Define your SQL query

query = """

SELECT c.CustomerId, c.FirstName, c.LastName, SUM(i.Total) AS TotalSpent

FROM customers c

JOIN invoices i ON c.CustomerId = i.CustomerId

GROUP BY c.CustomerId, c.FirstName, c.LastName

ORDER BY TotalSpent DESC

LIMIT 10;

"""

# Execute the query and load the results into a DataFrame

df = pd.read_sql_query(query, conn)

# Display the results

print(df) CustomerId FirstName LastName TotalSpent

0 6 Helena Holý 49.62

1 26 Richard Cunningham 47.62

2 57 Luis Rojas 46.62

3 45 Ladislav Kovács 45.62

4 46 Hugh O'Reilly 45.62

5 24 Frank Ralston 43.62

6 28 Julia Barnett 43.62

7 37 Fynn Zimmermann 43.62

8 7 Astrid Gruber 42.62

9 25 Victor Stevens 42.62This matches the result that SQLbot gave us. Type /e to exit the SQLbot utility.

This concludes my overview of SQLbot. For a deeper understanding of how I built this tool, check out the src/sqlbot folder of the Github repo (github.com/AndrewCarr24/sqlbot). The run_from_terminal.py script is the implementation of SQLbot as a command line tool. sqlbot.py in the chatbot folder has the code for using a LangChain chat model with tools and memory.